- Ofcom sets out more than 40 practical steps that services must take to keep children safer

- Sites and apps must introduce robust age-checks to prevent children seeing harmful content such as suicide, self-harm and pornography

- Harmful material must be filtered out or downranked in recommended content

Tech firms must act to stop their algorithms recommending harmful content to children and put in place robust age-checks to keep them safer, under detailed Ofcom plans today.

These are among more than 40 practical measures in our draft Children’s Safety Codes of Practice, which set out how we expect online services to meet their legal responsibilities to protect children online.

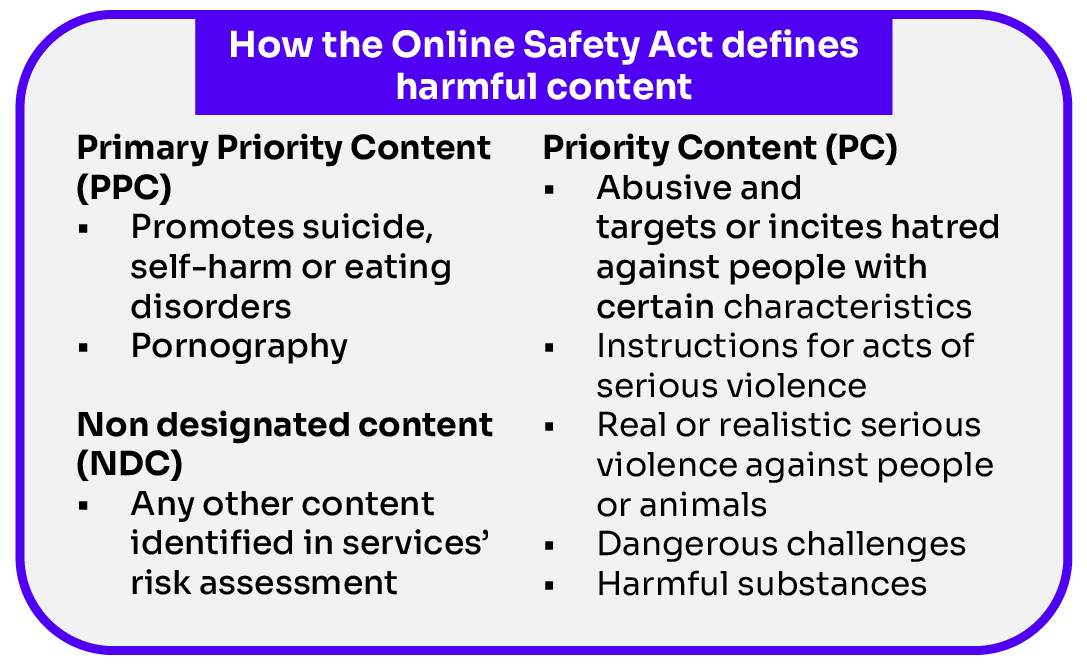

The Online Safety Act imposes strict new duties on services that can be accessed by children, including popular social media sites and apps and search engines. Firms must first assess the risk their service poses to children and then implement safety measures to mitigate those risks.[1]

This includes preventing children from encountering the most harmful content relating to suicide, self-harm, eating disorders, and pornography. Services must also minimise children’s exposure to other serious harms, including violent, hateful or abusive material, online bullying, and content promoting dangerous challenges.

Safer by design

Taken together, our draft Children’s Safety Codes – which go much further than current industry practice – demand a step-change from tech firms in how UK children are protected online. They make clear that, to protect children, platforms’ fundamental design and operating choices must be safer. We expect services to:

1. Carry out robust age-checks to stop children accessing harmful content

Our draft Codes expect much greater use of highly-effective age-assurance[2] so that services know which of their users are children in order to keep them safe.

In practice, this means that all services which do not ban harmful content, and those at higher risk of it being shared on their service, will be expected to implement highly effective age-checks to prevent children from seeing it. In some cases, this will mean preventing children from accessing the entire site or app. In others it might mean age-restricting parts of their site or app for adults-only access, or restricting children’s access to identified harmful content.

2. Ensure that algorithms which recommend content do not operate in a way that harms children

Recommender systems – algorithms which provide personalised recommendations to users – are children’s main pathway to harm online. Left unchecked, they risk serving up large volumes of unsolicited, dangerous content to children in their personalised news feeds or ‘For You’ pages. The cumulative effect of viewing this harmful content can have devastating consequences.

Under our proposals, any service which operates a recommender system and is at higher risk of harmful content must also use highly-effective age assurance to identify who their child users are. They must then configure their algorithms to filter out the most harmful content from these children’s feeds, and reduce the visibility and prominence of other harmful content.

Children must also be able to provide negative feedback directly to the recommender feed, so it can better learn what content they don’t want to see.

3. Introduce better moderation of content harmful to children

Evidence shows that content harmful to children is available on many services at scale, which suggests that services’ current efforts to moderate harmful content are insufficient.

Over a four-week period, 62% of children aged 13-17 report encountering online harm[3], while many consider it an ‘unavoidable’ part of their lives online. Research suggests that exposure to violent content begins in primary school, while children who encounter content promoting suicide or self-harm characterise it as ‘prolific’ on social media, with frequent exposure contributing to a collective normalisation and desensitisation.[4]

Under our draft Codes, all user-to-user services must have content moderation systems and processes that ensure swift action is taken against content harmful to children. Search engines are expected to take similar action; and where a user is believed to be a child, large search services must implement a ‘safe search’ setting which cannot be turned off and which filters out the most harmful content.

Other broader measures require clear policies from services on what kind of content is allowed, how content is prioritised for review, and for content moderation teams to be well-resourced and trained.

Ofcom will launch an additional consultation later this year on how automated tools, including AI, can be used to proactively detect illegal content and content most harmful to children – including previously undetected child sexual abuse material and content encouraging suicide and self-harm.[5]

We want children to enjoy life online. But for too long, their experiences have been blighted by seriously harmful content which they can’t avoid or control. Many parents share feelings of frustration and worry about how to keep their children safe. That must change.

In line with new online safety laws, our proposed Codes firmly place the responsibility for keeping children safer on tech firms. They will need to tame aggressive algorithms that push harmful content to children in their personalised feeds and introduce age-checks so children get an experience that’s right for their age.

Our measures – which go way beyond current industry standards – will deliver a step-change in online safety for children in the UK. Once they are in force we won’t hesitate to use our full range of enforcement powers to hold platforms to account. That’s a promise we make to children and parents today.

Dame Melanie Dawes, Ofcom Chief Executive

When we passed the Online Safety Act last year we went further than almost any other country in our bid to make the UK the safest place to be a child online. That task is a complex journey but one we are committed to, and our groundbreaking laws will hold tech companies to account in a way they have never before experienced.

The government assigned Ofcom to deliver the Act and today the regulator has been clear: platforms must introduce the kinds of age-checks young people experience in the real world and address algorithms which too readily mean they come across harmful material online. Once in place these measures will bring in a fundamental change in how children in the UK experience the online world.

I want to assure parents that protecting children is our number one priority and these laws will help keep their families safe. To platforms, my message is engage with us and prepare. Do not wait for enforcement and hefty fines – step up to meet your responsibilities and act now.

Michelle Donelan, Technology Secretary

Ofcom’s new draft code of practice is a welcome step in the right direction towards better protecting our children when they are online.

Building on the ambition in the Online Safety Act, the draft codes set appropriate, high standards and make it clear that all tech companies will have work to do to meet Ofcom’s expectations for keeping children safe. Tech companies will be legally required to make sure their platforms are fundamentally safe by design for children when the final code comes into effect, and we urge them to get ahead of the curve now and take immediate action to prevent inappropriate and harmful content from being shared with children and young people.

Importantly, this draft code shows that both the Online Safety Act and effective regulation have pivotal roles to play in ensuring children can access and explore the online world safely.

We look forward to engaging with Ofcom’s consultation and will share our safeguarding and child safety expertise to ensure that the voices and experiences of children and young people are central to decision-making and the final version of the code.

Sir Peter Wanless, CEO at the NSPCC

I welcome the publication of the draft Children’s Code, and, as a statutory consultee, I will be considering whether its proposed measures meet what children and Parliament have said needs to change. It is my hope that its implementation alongside other protections in the Online Safety Act will mark a significant step forward in the ongoing effort to safeguard children online, which I have long called for. Children themselves have consistently told me they want and need better protections to keep them safe online, most recently in their responses to The Big Ambition, which engaged with 367,000 children.

There is always more work to be done to ensure that every child is safe online. Protections in the Online Safety Act must be implemented swiftly, with effective age assurances, default safety settings and content moderation to prevent children from accessing platforms underage and keep them safe online as they explore, learn and play. I will continue to work with Ofcom, policy makers, government, schools and parents to ensure that children are kept safe online.

Dame Rachel de Souza, Children’s Commissioner for England

Stronger senior accountability and support for children and parents

Our draft Codes also include measures to ensure strong governance and accountability for children’s safety within tech firms. These include having a named person accountable for compliance with the children’s safety duties; an annual senior-body review of all risk management activities relating to children’s safety; and an employee Code of Conduct that sets standards for employees around protecting children.

A range of other proposed safety measures focus on providing more choice and support for children and the adults who care for them. These include having clear and accessible terms of service, and making sure that children can easily report content and make complaints.

Support tools should also be provided to give children more control over their interactions online – such as an option to decline group invites, block and mute user accounts, or disable comments on their own posts.

A reset for children’s safety

Building on the child protection measures in our draft illegal harms Codes of Practice and our industry guidance for pornography services published last year, we believe we will make a significant difference to children’s online experiences.

Children’s voices have been at the heart of our approach in designing the Codes. Over the last 12 months, we’ve heard from over 15,000 youngsters about their lives online and spoken with over 7,000 parents, as well as professionals who work with children. As part of our consultation process, we are holding a series of focused discussions with children from across the UK, to explore their views on our proposals in a safe environment. We also want to hear from other groups including parents and carers, the tech industry and civil society organisations – such as charities and expert professionals involved in protecting and promoting children’s interests.

Subject to responses to our consultation, which must be submitted by 17 July, we expect to publish our final Children’s Safety Codes of Practice within a year. Services will then have three months to conduct their children’s risk assessments, taking account of our guidance, which we have published in draft today.

Once approved by Parliament, the Codes will come into effect and we can begin enforcing the regime. We are clear that companies who fall short of their legal duties can expect to face enforcement action, including sizeable fines.

A ‘quick guide’ to our consultation proposals is available, along with an ‘at a glance’ summary of our proposed Codes measures.

Notes to editors:

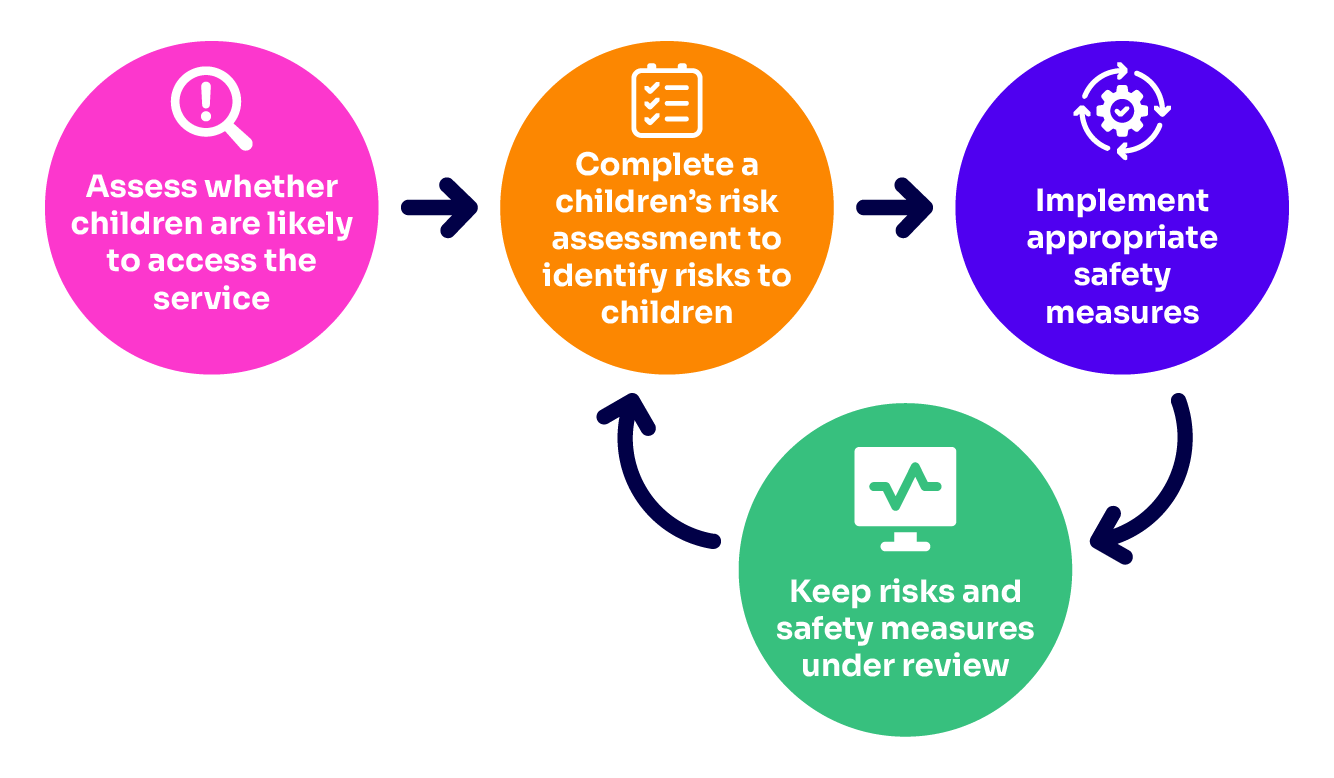

1. A core objective of the Online Safety Act is that online services are designed and operated in a way that affords a higher standard of protection for children than for adults. Under the Act, services must first assess whether children are likely to access their service – or part of it. This involves completing a ‘children’s access assessment’. We have today published our draft Children’s Access Assessment Guidance designed to help services comply. Second, if services are likely to be accessed by children, they need to complete a ‘children’s risk assessment’ to assess the risk they pose to children. We have published draft Children’s Risk Assessment Guidance to help services with this process. Services can also refer to our draft Children’s Register of Risk for more information on how risks of harm to children manifest online, and our draft Harms Guidance which sets out examples and the kind of content that Ofcom considers to be content harmful to children. Services must then implement the safety measures from our Codes to mitigate risks, and keep their approach to children’s safety under continual review.

2. Age assurance encompasses age verification or age estimation techniques. By “highly effective”, we mean that it must be technically accurate, robust, reliable, and fair. Examples of age assurance methods that could be highly effective if they meet the above criteria include photo-ID matching, facial age estimation, and reusable digital identity services. Examples of age assurance methods that are not capable of being highly effective include payment methods which do not require the user to be over 18, self-declaration of age, and general contractual restrictions on the use of the service by children.

3. https://www.ofcom.org.uk/research-and-data/online-research/internet-users-experience-of-harm-online

4. https://www.ofcom.org.uk/online-safety/protecting-children/eating-disorders-self-harm-and-suicide

5. We will also publish specific proposals later this year on our use of ‘tech notices’ to require services, in certain circumstances, to use accredited technologies to deal with specific types of illegal content, including child sexual abuse material whether communicated publicly or privately. We expect this to cover: guidance on how we would use these powers; our advice to government on the minimum standards of accuracy; and our approach to accrediting technologies.

We are also publishing new research today to inform and underpin our work as the UK’s online safety regulator: