Despite the many benefits of being online, most of us come across potentially harmful material. Ofcom research found that six in 10 users said they had encountered at least one piece of harmful content online in the previous four weeks alone. In some cases the material is illegal and should be taken down. In others, such as instances of bullying and harassment or the promotion of self-harm, the content is legal but can still cause harm, and users might wish to avoid seeing it.

Reporting potentially harmful content

People continue to play an important role in identifying potentially harmful content so that it can be moderated and labelled as sensitive, particularly where the content is ambiguous and difficult for automated systems to detect. Yet although encountering potentially harmful content is widespread, only two in 10 people say they reported the last piece of potentially harmful content they came across.

The reasons are complex. Sometimes the cause is simply a lack of awareness or knowledge of how to report, which is why Ofcom launched a social media campaign to raise awareness among young people. Other reasons include a feeling that reporting doesn't make any difference, or that it's someone else's responsibility. But another, deceptively simple, factor is the design of the nudges and prompts to report that users encounter as they browse. Behavioural research has long established that even small changes to the environment in which we make decisions (the choice architecture) can have surprisingly large effects. We set out to measure the difference such changes can make to the reporting of harmful content.

The set-up

In research we carried out last year, we created a mock-up version of a video-sharing platform (VSP) and recruited a representative sample of adult VSP users. We randomly assigned them to three groups and asked them to browse six video clips: three with neutral content and three with potentially harmful content. Safeguards were used to protect participants. Each group had the same user experience apart from one change to the reporting options:

Control: the reporting option was located behind the ellipsis (…) on the main options bar, a design widely used by VSPs.

Salience: the reporting option was moved from behind the ellipsis to a flag on the main options bar. Our attention is drawn to what is noticeable, so increasing the option's salience could trigger more reporting.

Salience plus active prompt: the same flag as in the salience group, plus an additional prompt to report when a participant commented on or disliked a video. The theory here is that some users who find content offensive might dislike it or comment on it instead of reporting it, and a small nudge might prompt them to submit a report.

Figure 1: Salience: the mock-up VSP with the reporting flag added

Figure 2: ‘Active prompt’ for those in the salience plus active prompt group who click ‘dislike’. A similar prompt was given to those who commented.

What we found: high engagement, low reporting

The first thing that stood out was that participants were busy, even though they were browsing an artificial platform. Up to 30% clicked ‘like’ on neutral videos and up to 27% clicked ‘dislike’ on potentially harmful videos. This is reassuring, as it shows participants were engaged and interacting with the content.

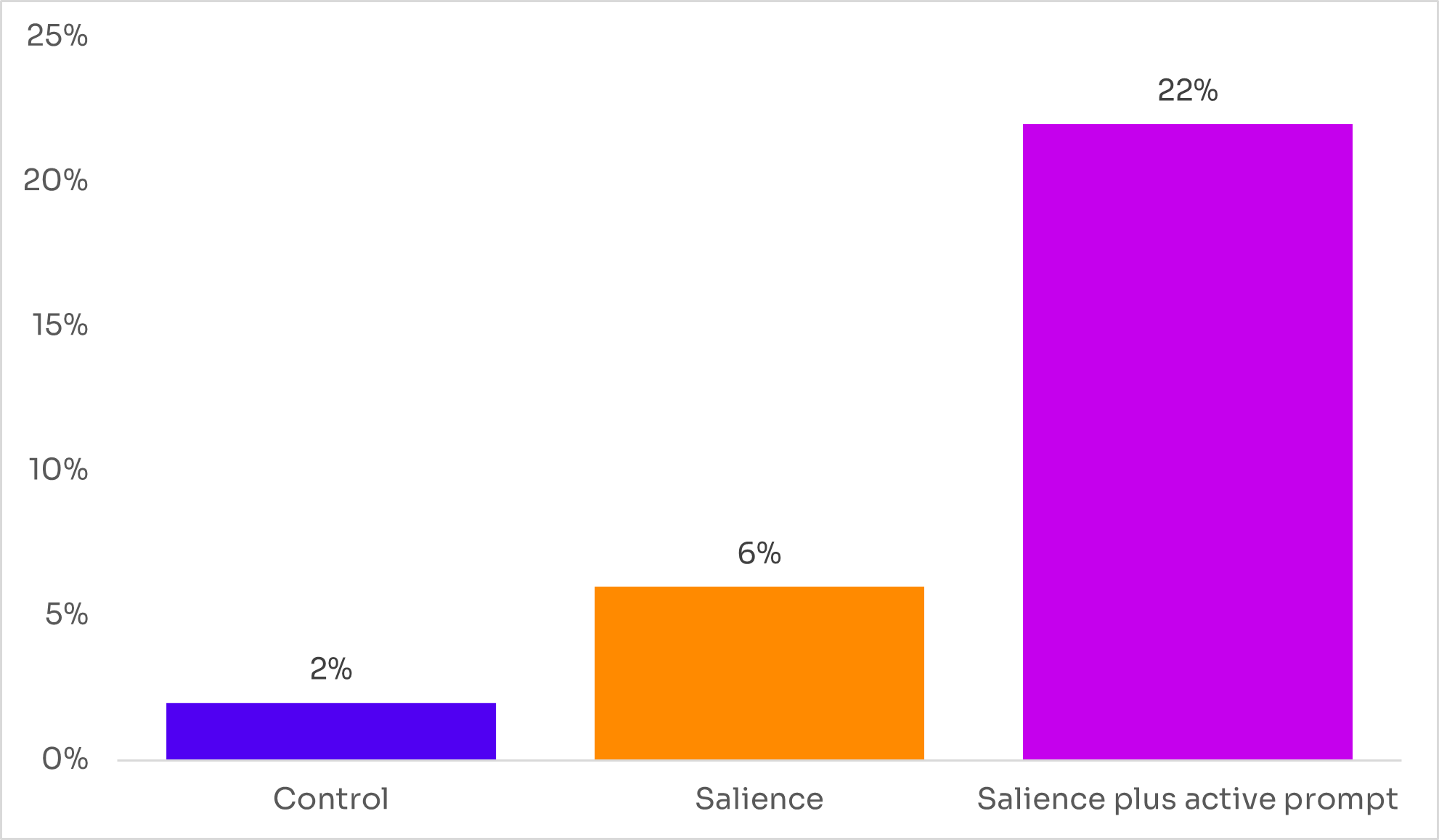

The second notable finding was that in the control group, where the reporting option sat behind an ellipsis, 98% of participants didn't report anything at all. They might have been busy interacting, but they weren't busy reporting.

This makes the impact of the nudges striking. Simply adding a reporting flag to the main options bar tripled the proportion of participants reporting, from 2% to 6%. A small change in prominence produced a worthwhile lift in activity.

But that impact is dwarfed by the effect of the active prompt, which increased the proportion of participants reporting from 2% to 22%, an elevenfold increase.

The prompt deploys several behavioural insight techniques. It is targeted: it appears only for those commenting or disliking, who are likely to be more open to reporting. And it is timely: unlike campaigns to encourage reporting, it reaches people at the moment they are making a decision. Even so, the scale of the impact was larger than we had expected. Interestingly, we saw no drop-off in the accuracy of reports, so the increase in reporting did not come at the cost of, for example, more reports of neutral material.

Figure 3: Bar chart showing the percentage of potentially harmful videos reported

Experiment vs real world

It’s important to note that the scale of the effect could be influenced by social desirability bias: participants might perform the socially desirable action (in this case, reporting) because they feel it is what they should do while taking part in an experiment. If so, the effect on a real platform could be smaller.

Additionally, the experiment doesn’t test the impact of these nudges over time. Seeing the prompt repeatedly could help embed a new reporting habit, but it will also reduce the prompt's salience and potentially its impact. This matters because so much behaviour on VSPs isn’t one-off; it is often repeated many times a day.

To disrupt or not to disrupt?

While it’s unwise to extrapolate from these results to longer-term impact, the trial does give us important insights into shorter-term behaviour. Small design changes, such as increasing the salience of the reporting option, can have a significant effect on the number of reports made and, importantly, do so with minimal disruption to the user experience. Adding a flag doesn’t actively divert users from browsing, but its greater prominence does trigger additional reporting.

The active prompt nudge, though more effective, is undoubtedly more intrusive. The drawbacks of overusing pop-ups are well documented, and they do interrupt the user experience. However, prompts are already being deployed by social media platforms to improve online safety, such as Twitter’s prompt to reconsider Tweet replies containing harmful language. And intelligent design means that not all users see them, only those for whom they are most relevant: in this case, those who are disliking or commenting.

Excessive use of active prompts is likely to decrease their salience and impact, but this research suggests they can be an effective tool. The best way to harness them might be to target their use at parts of the online world with riskier content, for example, or at services used by more vulnerable users, such as under-18s.

Who to nudge, when to nudge

What we have learned is perhaps reassuring. Our experiments suggest that even very low rates of reporting can be raised through small changes to choice architecture. And active, targeted prompts are a promising avenue for safety measures that encourage desirable behaviours without bombarding everyone.

This piece was written by Rupert Gill, based on research by Jonathan Porter, Alex Jenkins, John Ivory and Rupert Gill.

Disclaimer: The analyses, opinions and findings in this article should not be interpreted as an official position of Ofcom. Ofcom’s Behavioural Insight blogs are written as points of interest. They are not intended to be an official statement of Ofcom's policy or thinking.