Today, the Online Safety Act received Royal Assent, meaning Ofcom’s powers as online safety regulator have officially commenced.

We are now moving quickly to implement the new laws and have today published our regulatory approach and timelines. We also set out how we will drive change in line with the aims of the Act and support services to comply with their new legal obligations.

The Online Safety Act makes companies that operate a wide range of popular online services legally responsible for keeping people, especially children, safe online. Services must do this by assessing and managing safety risks arising from content and conduct on their sites and apps.

Services in scope of the new rules include user-to-user services such as social media, photo- and video-sharing services, chat and instant messaging platforms, and online and mobile gaming, as well as search services and pornography sites.

Ofcom's role

Our focus will be on the services and features that pose the greatest risk of harm to UK users. This will involve ongoing regulatory supervision of the largest and riskiest services.

Importantly, we will not be instructing firms to remove particular pieces of content or take down specific accounts. Instead, we will be tackling the root causes of illegal online content, and of content that is harmful to children, by seeking systemic improvements across the industry – thereby reducing risk at scale, rather than focusing on individual instances.

We will need to strike an appropriate balance, intervening to protect users from harm where necessary, while ensuring that regulation appropriately protects privacy and freedom of expression, and promotes innovation.

How we will drive change

We expect industry to work with us and we expect change. Specifically, we will be focused on ensuring that tech firms:

- have stronger safety governance, so that user safety is represented at all levels of the organisation – from the Board down to product and engineering teams;

- design and operate their services with safety in mind;

- enable users to have more choice and control over their online experience; and

- are more transparent about their safety measures and decision-making to promote trust.

Where we identify compliance failures, we can impose fines of up to £18m or 10% of qualifying worldwide revenue (whichever is greater).

These new laws give Ofcom the power to start making a real difference in creating a safer life online for children and adults in the UK. We've already trained and hired expert teams with experience across the online sector, and today we're setting out a clear timeline for holding tech firms to account.

Ofcom is not a censor, and our new powers are not about taking content down. Our job is to tackle the root causes of harm. We will set new standards online, making sure sites and apps are safer by design. Importantly, we'll also take full account of people's rights to privacy and freedom of expression. We know a safer life online cannot be achieved overnight; but Ofcom is ready to meet the scale and urgency of the challenge.

Dame Melanie Dawes, Ofcom's Chief Executive

Implementation roadmap

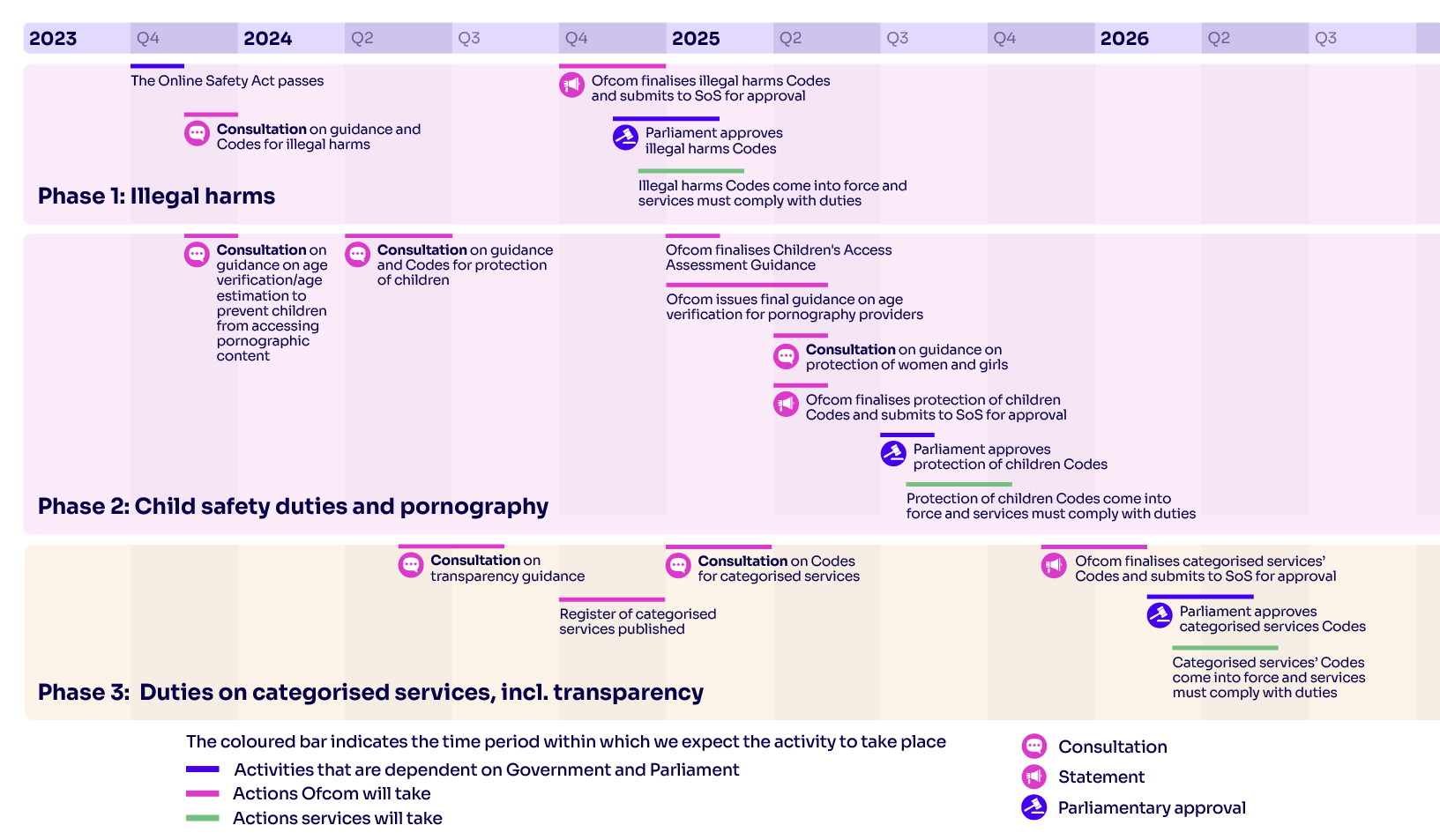

The new laws will roll out in three phases as follows, with the timing driven by the requirements of the Act and relevant secondary legislation:

Phase one: Illegal content

We are publishing our first consultation on illegal harms – including child sexual abuse material, terrorist content and fraud – on 9 November 2023. This will contain draft codes of practice and proposals for how services can comply with the illegal content safety duties.

Phase two: Child safety, pornography, and protecting women and girls

The first consultation in this phase, due in December 2023, will set out draft guidance for services that host pornographic content. Further consultations relating to the child safety duties under the Act will follow in Spring 2024, and we will publish draft guidance on protecting women and girls by Spring 2025.

Phase three: Additional duties for categorised services

These duties – including to publish transparency reports and to deploy user empowerment measures – apply to services that meet certain criteria related to their number of users or high-risk features of their service. We will publish advice to the Secretary of State regarding categorisation, and draft guidance on our approach to transparency reporting, in Spring 2024.

Subject to the introduction of secondary legislation setting the thresholds for categorisation, we expect to publish a register of categorised services by the end of 2024.

Further proposals, including a draft code of practice on fraudulent advertising and proposals on transparency notices, will follow in early and mid-2025 respectively, with our final codes and guidance published around the end of that year.

More detailed information about the different implementation phases is available (PDF, 1.3 MB).