- People need advanced reading skills to understand UK VSPs’ terms and conditions, meaning they’re not suitable for many users, including children

- Some VSPs are not clear enough about what content is and isn’t allowed, or the consequences users face if they break the rules

- Content moderators don’t always get the guidance and training they need to understand and properly enforce the rules

- VSPs can adopt good practices to drive consistent standards across the industry

Today’s report, Regulating Video-Sharing Platforms (VSPs), lifts the lid for the first time on how easy it is for people to access, use and understand the terms and conditions set by six platforms: BitChute, Brand New Tube, OnlyFans, Snapchat, TikTok and Twitch.[1]

It also scrutinises how these VSPs communicate to users what content is and isn’t allowed on their platforms and the penalties for breaking the rules, as well as the guidance and training given to staff tasked with moderating content and enforcing the rules.

Ofcom’s study finds that the terms and conditions of VSPs can take a long time to read and require advanced reading skills to understand. This degree of complexity means they’re unsuitable for many users, including children.

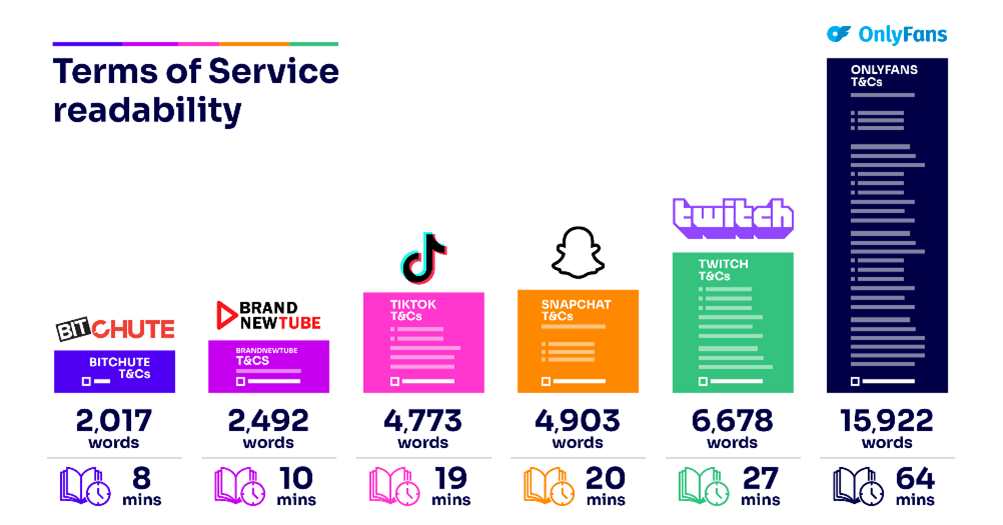

At nearly 16,000 words, OnlyFans had the longest terms of service,[2] which would take its adult users over an hour to read. This was followed by Twitch (27 minutes, 6,678 words), Snapchat (20 minutes, 4,903 words), TikTok (19 minutes, 4,773 words), Brand New Tube (10 minutes, 2,492 words) and BitChute (8 minutes, 2,017 words).
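The reading times above are consistent with a reading speed of about 250 words per minute, rounded to the nearest minute. This release does not say which rate Ofcom actually used, so the 250 words-per-minute figure below is an assumption, and the OnlyFans count of 16,000 is an approximation of "nearly 16,000". A minimal sketch that reproduces the figures:

```python
# Word counts for each platform's terms of service, as given in the text.
# OnlyFans is described only as "nearly 16,000", so 16,000 is an approximation.
WORD_COUNTS = {
    "OnlyFans": 16_000,
    "Twitch": 6_678,
    "Snapchat": 4_903,
    "TikTok": 4_773,
    "Brand New Tube": 2_492,
    "BitChute": 2_017,
}

# Assumption: an adult reading speed of about 250 words per minute.
# The rate Ofcom used is not stated in this release.
WORDS_PER_MINUTE = 250


def reading_minutes(words: int, wpm: int = WORDS_PER_MINUTE) -> int:
    """Estimated reading time, rounded to the nearest whole minute."""
    return round(words / wpm)


# Print platforms from longest to shortest terms of service.
for platform, words in sorted(WORD_COUNTS.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{platform}: {words:,} words, about {reading_minutes(words)} minutes")
```

At this assumed rate, 16,000 words works out at 64 minutes, in line with "over an hour" for OnlyFans.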

Ofcom calculated a ‘reading ease’ score for each platform’s terms of service. All but one were assessed as being “difficult to read and best understood by high-school graduates.”[3] Twitch’s terms were found to be the most difficult to read. TikTok was the only platform whose terms of service were likely to be understood by users without a high-school or university education. That said, the reading level required was still higher than that of the youngest users permitted on the platform.

Ofcom’s report also found that Snapchat, TikTok and BitChute use “click-wrap” agreements, in which acceptance of the terms of service is implicit in the act of signing up. Because users are not prompted or encouraged to open the terms of service, it is easier to agree to them without actually reading them.

The six platforms’ community guidelines, which usually set out the rules for using the service in more user-friendly language, were typically shorter than their terms of service, taking between four and 11 minutes to read. Snapchat had the shortest community guidelines, taking four minutes to read. However, the language used meant it had the poorest reading-ease score and would likely require a university-level education to understand.

Room for improvement

Our study also identified several other areas where VSPs can learn lessons and take steps to improve. In particular, we found that:

- users may not fully understand what content is and isn’t allowed on some VSPs. VSPs’ terms and conditions do include rules regarding harmful material that should be restricted for children, but several aren’t clear about exceptions to these rules.[4] OnlyFans and Snapchat provide little detail to users about prohibited content.

- users may be unlikely to fully understand the consequences of breaking VSPs’ rules. While TikTok and Twitch have dedicated pages providing detailed information on the penalties they impose for breaking their rules, other providers offer users little information on the actions moderators may take. We also found inconsistencies between what Brand New Tube’s terms and conditions tell users about different types of harmful content and its internal guidance for moderators.

- content moderators do not always have sufficient internal guidance and training on how to enforce their terms and conditions. The quality of internal resources and training for moderators varies significantly between VSPs, and few provide specific guidance on what to do in a crisis situation.[5]

Good practice examples for VSPs to learn from

The report also highlights many examples of industry good practice. These include:

- Terms and conditions that list a wide range of content that might be considered harmful to children: TikTok’s, Snapchat’s and Twitch’s terms and conditions all cover many different types of content that may cause harm to children.

- Terms and conditions that explain to users what happens when rules are broken: Twitch and TikTok both have external pages containing detailed information about their penalties, enforcement and banning policies.

- Testing the effectiveness of guidance for moderators: Policy changes at TikTok are tested in a simulated environment. Snapchat analyses moderators’ performance to test the effectiveness of its internal policies and guidance.

We will continue to work with platforms to drive improvements as part of our ongoing engagement.

Our regulation of VSPs[6] is important in informing our broader online safety regulatory approach under the Online Safety Bill, which we expect to receive Royal Assent later this year.

Today’s report is the first in a series that we will publish during 2023, which will include a report on VSPs’ approach to protecting children from harm.

Jessica Zucker, Online Safety Policy Director at Ofcom, said: “Terms and conditions are fundamental to protecting people, including children, from harm when using social video sites and apps. That’s because the reporting of potentially harmful videos – and effective moderation of that content – can only work if there are clear and unambiguous rules underpinning the process.

“Our report found that lengthy, impenetrable and, in some cases, inconsistent terms drawn up by some UK video-sharing platforms risk leaving users and moderators in the dark. So today we’re calling on platforms to make improvements, taking account of industry good practice highlighted in our report.”

Notes to editors:

1. Terms and conditions refer to VSPs’ community guidelines and terms of service, which are publicly available to users. Community guidelines usually set out the rules for using the service in more user-friendly language. Terms of service are usually a legal agreement to which users must consent in order to use the service.

2. OnlyFans is a subscription service specialising in adult content, and we recognise that its terms of service may need to include more information, for example about payment terms and age verification.

3. The Flesch-Kincaid calculator creates a score based on the average number of words per sentence and the average number of syllables per word, with a lower score denoting greater reading difficulty: 0–30 = ‘Very difficult to read and best understood by university graduates’; 30–50 = ‘Difficult to read and best understood by high school graduates’; 50–60 = ‘Fairly difficult to read’; 60–70 = ‘Easily understood by 13- to 15-year-old students’.

4. By ‘exceptions’ we mean, for example, that while all platforms restrict under-18s from accessing sexually explicit content, they all also allow nudity in certain non-sexual contexts. These include reproductive and sexual health content; body exposure in limited situations under regional exceptions, such as common cultural practices; and breastfeeding. Other non-sexual depictions of nudity may also be permitted.

5. Examples of crisis situations could include content that poses an imminent threat to human life.

6. In November 2020, Ofcom was appointed as the regulator for video-sharing platforms (VSPs) established in the UK. The Communications Act 2003 lists measures that VSP providers must take, as appropriate, to protect users from relevant harmful material and under-18s from restricted material. Where a VSP provider takes a measure, it must implement it in a way that achieves the protection for which it was intended. Ofcom’s published guidance explains that we consider it unlikely that effective protection of users can be achieved without terms and conditions being in place and effectively implemented.
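The reading-ease note above describes the score only in words. The bands it lists match the standard Flesch Reading Ease scale, where the score is 206.835 - 1.015 × (words ÷ sentences) - 84.6 × (syllables ÷ words) and higher means easier (unlike the Flesch-Kincaid Grade Level, where higher means harder). The sketch below is a rough illustration under stated assumptions: this release does not say which tool or syllable counter Ofcom used, so the vowel-run syllable heuristic, the sentence splitting and the handling of shared band boundaries (30, 50, 60, 70) are all assumptions.

```python
import re


def count_syllables(word: str) -> int:
    """Approximate syllables by counting runs of vowels (a heuristic, not a dictionary lookup)."""
    word = word.lower().strip("'’")
    count = len(re.findall(r"[aeiouy]+", word))
    # Assumption: treat a final 'e' as silent ("guidance"), but not in "-le" endings ("table").
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)  # every word has at least one syllable


def flesch_reading_ease(text: str) -> float:
    """Standard Flesch Reading Ease: lower scores mean harder text."""
    # Assumption: sentences end at '.', '!' or '?'; words are runs of letters/apostrophes.
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z'’]+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    words_per_sentence = len(words) / len(sentences)
    syllables_per_word = syllables / len(words)
    return 206.835 - 1.015 * words_per_sentence - 84.6 * syllables_per_word


def band(score: float) -> str:
    """Map a score to the bands in the notes; a boundary score goes to the easier band (assumption)."""
    if score < 30:
        return "Very difficult to read and best understood by university graduates"
    if score < 50:
        return "Difficult to read and best understood by high school graduates"
    if score < 60:
        return "Fairly difficult to read"
    if score < 70:
        return "Easily understood by 13- to 15-year-old students"
    return "Above the bands listed in the notes (easier to read)"


simple = "The cat sat. The dog ran."
dense = ("Notwithstanding the aforementioned considerations, "
         "indemnification obligations survive termination.")
for text in (simple, dense):
    score = flesch_reading_ease(text)
    print(f"{score:.1f}: {band(score)}")
```

Because the formula penalises long sentences and long words, a single dense legal sentence can score below zero, while short sentences of one-syllable words can exceed 100.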