- Ofcom sets out first steps tech firms can take to create a safer life online

- Children are key priority, with measures proposed to tackle child sexual abuse and grooming, and pro-suicide content

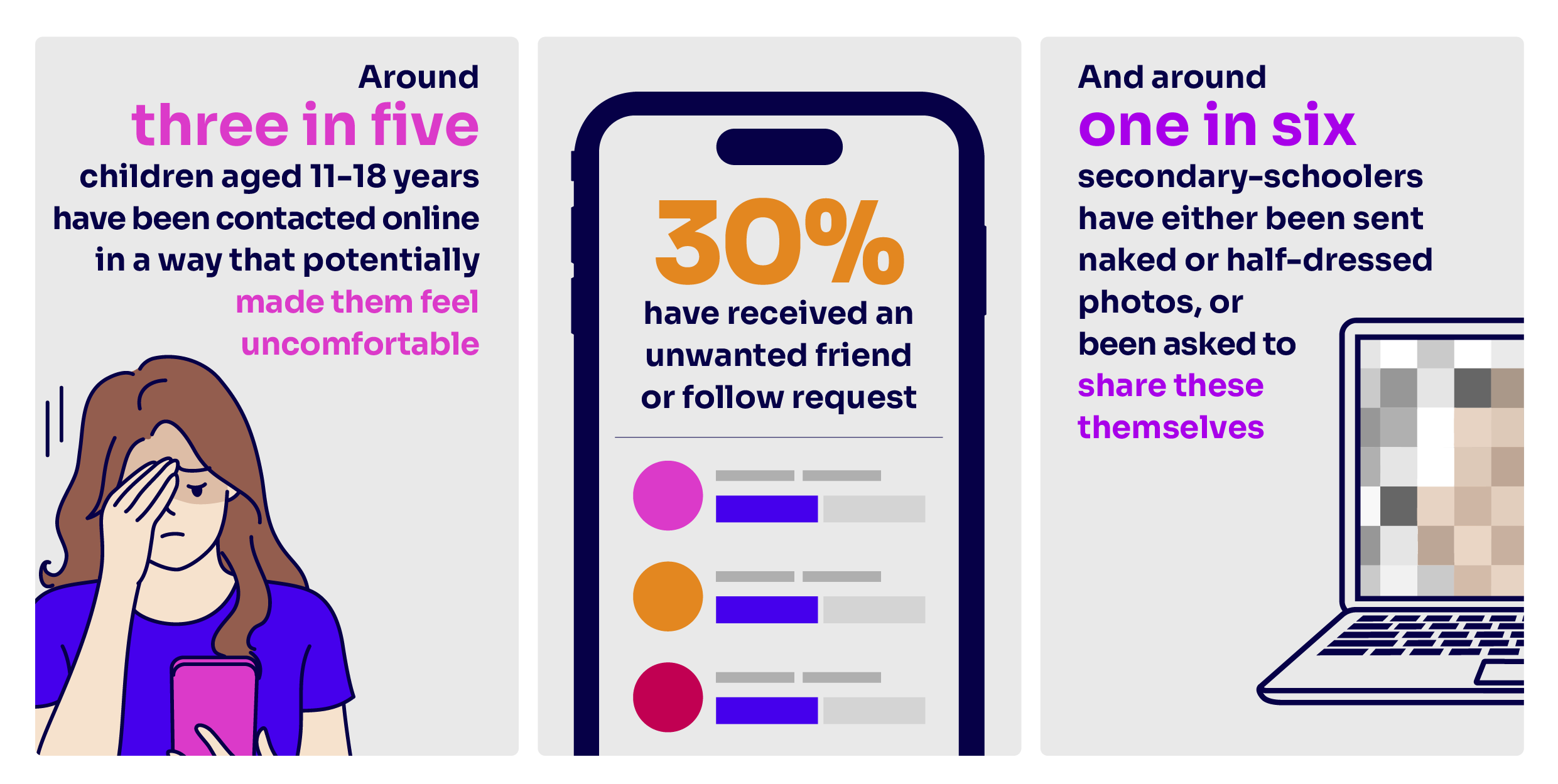

- New research reveals children’s experiences of potentially unwanted contact online

- Tech companies will also need to address fraud and terrorist content

Tech firms must use a range of measures to protect their users from illegal content online – from child sexual abuse material and grooming to fraud – under detailed plans set out by the new online safety regulator today.

Ofcom is exercising its new powers to release draft Codes of Practice that social media, gaming, pornography, search and sharing sites can follow to meet their duties under the Online Safety Act, which came into law last month.[1]

Ofcom’s role will be to force firms to tackle the causes of online harm by making their services fundamentally safer. Our powers will not involve us making decisions about individual videos, posts, messages or accounts, or responding to individual complaints.

Firms will be required to assess the risk of users being harmed by illegal content on their platform, and take appropriate steps to protect them from it. There is a particular focus on ‘priority offences’ set out in the legislation, such as child abuse, grooming and encouraging suicide, but the duties extend to any illegal content.[2]

“Regulation is here, and we’re wasting no time in setting out how we expect tech firms to protect people from illegal harm online, while upholding freedom of expression. Children have told us about the dangers they face, and we’re determined to create a safer life online for young people in particular.”

Dame Melanie Dawes, Ofcom’s Chief Executive

Combatting child sexual abuse and grooming

Protecting children will be Ofcom’s first priority as the online safety regulator. Scattergun friend requests are frequently used by adults looking to groom children for the purposes of sexual abuse. Our new research, published today, sets out the scale and nature of children’s experiences of potentially unwanted and inappropriate contact online.

Three in five secondary-school-aged children (11-18 years) have been contacted online in a way that potentially made them feel uncomfortable. Some 30% have received an unwanted friend or follow request. And around one in six secondary-schoolers (16%) have either been sent naked or half-dressed photos, or been asked to share these themselves.[3]

“Our figures show that most secondary-school children have been contacted online in a way that potentially makes them feel uncomfortable. For many, it happens repeatedly. If these unwanted approaches occurred so often in the outside world, most parents would hardly want their children to leave the house. Yet somehow, in the online space, they have become almost routine. That cannot continue.”

Dame Melanie

Given the range and diversity of services in scope of the new laws, we are not taking a ‘one size fits all’ approach. We are proposing some measures for all services in scope, and other measures that depend on the risks the service has identified in its illegal content risk assessment and the size of the service.

Under our draft codes published today, larger and higher-risk services[4] should ensure that, by default:

- Children are not presented with lists of suggested friends;

- Children do not appear in other users’ lists of suggested friends;

- Children are not visible in other users’ connection lists;

- Children’s connection lists are not visible to other users;

- Accounts outside a child’s connection list cannot send them direct messages;[5] and

- Children’s location information is not visible to any other users.

We are also proposing that larger and higher-risk services[4] should:

- use a technology called ‘hash matching’, which identifies illegal images of child sexual abuse by matching them against a database of known illegal images, to help detect and remove child sexual abuse material (CSAM) circulating online;[6] and

- use automated tools to detect URLs that have been identified as hosting CSAM.
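As a rough illustration only, and not part of the draft Codes, the two detection measures above can be sketched in Python. The sketch assumes exact-match SHA-256 hashing, which catches only byte-identical copies of a file; the text does not specify which hashing method services should use. The names `KNOWN_HASHES`, `BLOCKED_URLS`, `matches_known_hash` and `is_blocked_url`, and the sample data, are hypothetical.

```python
import hashlib
from urllib.parse import urlsplit

# Hypothetical placeholder data. A real service would load hash and URL lists
# supplied by a trusted body rather than hard-coding them.
KNOWN_HASHES = {hashlib.sha256(b"example-known-file").hexdigest()}
BLOCKED_URLS = {"example.invalid/flagged/page"}


def file_hash(data: bytes) -> str:
    """Return the SHA-256 digest of the uploaded bytes as a hex string."""
    return hashlib.sha256(data).hexdigest()


def matches_known_hash(data: bytes, known_hashes: set[str] = KNOWN_HASHES) -> bool:
    """True if the upload's hash appears in the database of known hashes."""
    return file_hash(data) in known_hashes


def normalise_url(url: str) -> str:
    """Reduce a URL to lower-case host plus path, dropping scheme and trailing slash."""
    parts = urlsplit(url.strip())
    host = (parts.hostname or "").lower()
    path = parts.path.rstrip("/")
    return f"{host}{path}"


def is_blocked_url(url: str, blocked: set[str] = BLOCKED_URLS) -> bool:
    """True if the normalised URL appears on the list of flagged URLs."""
    return normalise_url(url) in blocked
```

A service might run both checks when content is uploaded or shared and route any match to its moderation team for review.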

All large general search services should provide crisis prevention information in response to search requests regarding suicide and queries seeking specific, practical or instructive information regarding suicide methods.

“In the five years it has taken to get the Online Safety Act onto the statute book, grooming crimes against children on social media have increased by a staggering 82%.

“That is why Ofcom’s focus on tackling online grooming is so welcome, with this code of practice outlining the minimum measures companies should be taking to better protect children.

“We look forward to working with Ofcom to ensure these initial codes help to build bold and ambitious regulation that listens to the voices of children and responds to their experiences in order to keep them safe.”

Sir Peter Wanless, NSPCC Chief Executive

“We stand ready to work with Ofcom, and with companies looking to do the right thing to comply with the new laws.

“It’s right that protecting children and ensuring the spread of child sexual abuse imagery is stopped is top of the agenda. It’s vital companies are proactive in assessing and understanding the potential risks on their platforms, and taking steps to make sure safety is designed in.

“Making the internet safer does not end with this Bill becoming an Act. The scale of child sexual abuse, and the harms children are exposed to online, have escalated in the years this legislation has been going through Parliament.

“Companies in scope of the regulations now have a huge opportunity to be part of a real step forward in terms of child safety.”

Susie Hargreaves OBE, Chief Executive of the Internet Watch Foundation

“Today marks a crucial first step in making the Online Safety Act a reality, cleaning up the wild west of social media and making the UK the safest place in the world to be online.

“Before the Bill became law, we worked with Ofcom to make sure they could act swiftly to tackle the most harmful illegal content first. By working with companies to set out how they can comply with these duties, the first of their kind anywhere in the world, the process of implementation starts today.”

Michelle Donelan, Science, Innovation and Technology Secretary

Fighting fraud and terrorism

Today’s draft codes also propose targeted steps to combat fraud and terrorism. Among the measures for large higher-risk services are:

- Automatic detection. Services should deploy keyword detection to find and remove posts linked to the sale of stolen credentials, such as credit card details. This should help prevent attempts to commit fraud. Certain services should have dedicated fraud reporting channels for trusted authorities, allowing fraud to be addressed faster.

- Verifying accounts. Services that offer to verify accounts should explain how they do this. This is aimed at reducing people’s exposure to fake accounts, to address the risk of fraud and foreign interference in UK processes such as elections.

All services should block accounts run by proscribed terrorist organisations.

More broadly, we are proposing a core list of measures that services can adopt to mitigate the risk of all types of illegal harm, including:

- Name an accountable person. All services will need to name a person accountable to their most senior governance body for compliance with their illegal content, reporting and complaints duties.

- Teams to tackle content. Services should make sure their content and search moderation teams are well resourced and trained, set performance targets and monitor progress against them, and prepare and apply policies for how they prioritise their review of content.

- Easy reporting and blocking. Services should make sure users can easily report potentially harmful content, make complaints, block other users and disable comments. This can help women avoid harassment, abuse, cyberflashing, stalking, and coercive and controlling behaviour.

- Safety tests for recommender algorithms. Services that carry out tests on these features, which automatically recommend content to their users, must also assess, whenever they update them, whether the changes risk disseminating illegal content.

Next steps

Over the last three years, Ofcom has been gearing up for its new role by assembling a world-class team, led by Gill Whitehead. We have also been carrying out an extensive programme of research, engaging with industry, collecting evidence to inform our Codes and guidance, building relationships with other regulators in the UK and overseas, and regulating video-sharing platforms.

Today, we have published a set of draft instruments which, once finalised, will form the basis of pioneering online safety regulation in the UK. As well as the Codes of Practice for online services, these include guidance and registers relating to risk, record keeping and enforcement.

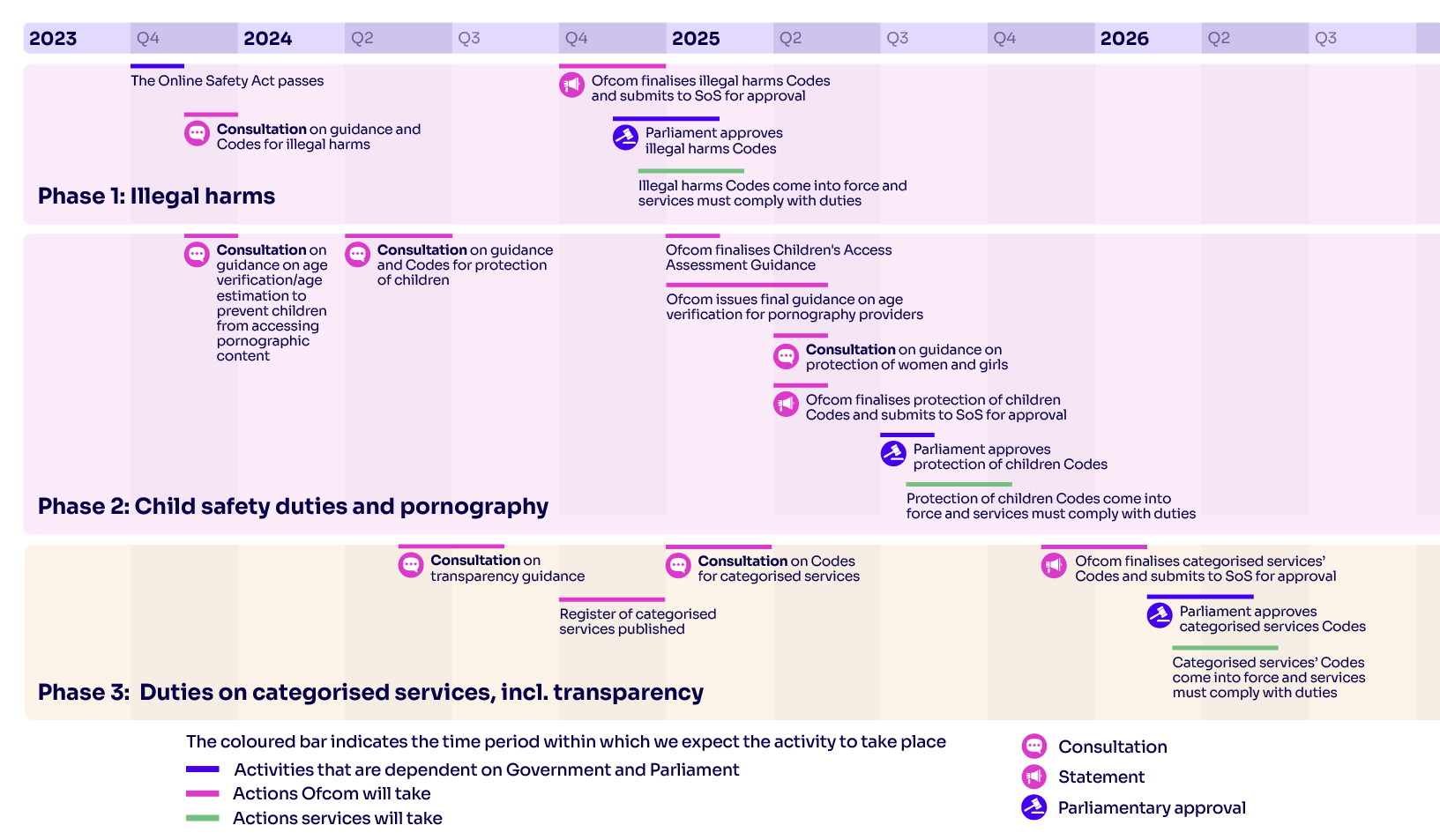

We are now consulting on these detailed documents, hearing from industry and a range of experts as we develop long-term, final versions that we intend to publish in autumn next year.

Services will then have three months to conduct their risk assessment, while Ofcom’s final Codes of Practice will be subject to Parliamentary approval. We expect this to conclude by the end of next year, at which point the Codes will come into force and we can begin enforcing the regime. Companies who fall short will face enforcement action, including possible fines.

Fully implementing the new online safety laws will involve several phases, including:

- Later this year, we will propose guidance on how adult sites should comply with their duty to ensure children cannot access pornographic content.

- In spring 2024, we will publish a consultation on additional protections for children from harmful content promoting, among other things, suicide, self-harm, eating disorders and cyberbullying.

Notes to editors

1. The Codes of Practice are not prescriptive or exhaustive. Companies can choose a different approach to meeting their duties, depending on the nature of their service and the technology they want to deploy. But firms that implement our Codes will know they are compliant with their safety duties.

2. Priority offences set out in the Act include:

   - child sexual abuse and grooming;

   - encouraging or assisting suicide or serious self-harm;

   - harassment, stalking, threats and abuse;

   - controlling or coercive behaviour;

   - intimate image abuse;

   - sexual exploitation of adults;

   - unlawful immigration and human trafficking;

   - supplying drugs, psychoactive substances, firearms and other weapons;

   - extreme pornographic content;

   - terrorism and hate speech;

   - fraud; and

   - foreign interference.

3. BMG surveyed 2,031 children aged 11-18 in the UK between 7th and 22nd December 2022. Quotas were set to ensure a representative sample of the UK population aged 11-18. Results are weighted by age and gender by nation, region, ethnicity and socio-economic grade.

4. These proposals apply to user-to-user services with a high risk of the relevant harm, and large user-to-user services with at least a medium risk of the relevant harm. More details on the services to which these proposals apply are included in today’s consultation.

5. Services with no formal connection features must implement mechanisms to ensure child users do not receive unsolicited direct messages.

6. This proposal will only apply to public communications, where it is technically feasible for a service to use this technology. Consistent with the restrictions in the Act, it does not apply to private communications or end-to-end encrypted communications. However, end-to-end encrypted services are still subject to all the safety duties set out in the Act and will still need to take steps to mitigate risks of CSAM on their service.