- Ofcom sets out guidance to help services protect against harmful online videos

- Regulation should protect users while upholding freedom of expression

A third of people who use online video-sharing services have come across hateful content in the last three months, according to a new study by Ofcom.

The news comes as Ofcom proposes new guidance for sites and apps known as ‘video-sharing platforms’ (VSPs), setting out practical steps to protect users from harmful material.

VSPs are a type of online video service where users can upload and share videos with other members of the public. They allow people to engage with a wide range of content and social features.

Under laws introduced by Parliament last year, VSPs established in the UK must take measures to protect under-18s from potentially harmful video content, and to protect all users from videos likely to incite violence or hatred, as well as certain types of criminal content.[1] Ofcom’s job is to enforce these rules and hold VSPs to account.

Today’s draft guidance is designed to help these companies understand what is expected of them under the new rules, and to explain how they might meet their obligations in relation to protecting users from harm.

Harmful experiences uncovered

To inform our approach, Ofcom has researched how people in the UK use VSPs, and their claimed exposure to potentially harmful content.[2] Our major findings are:

- Hate speech. A third of users (32%) say they have witnessed or experienced hateful content.[3] Hateful content was most often directed towards a racial group (59%), followed by religious groups (28%), transgender people (25%) and those of a particular sexual orientation (23%).

- Bullying, abuse and violence. A quarter (26%) of users claim to have been exposed to bullying, abusive behaviour and threats, and the same proportion came across violent or disturbing content.

- Racist content. One in five users (21%) say they witnessed or experienced racist content, with levels of exposure higher among users from minority ethnic backgrounds (40%), compared to users from a white background (19%).

- Most users encounter potentially harmful videos of some sort. Most VSP users (70%) say they have been exposed to a potentially harmful experience in the last three months, rising to 79% among 13-17 year-olds.[4]

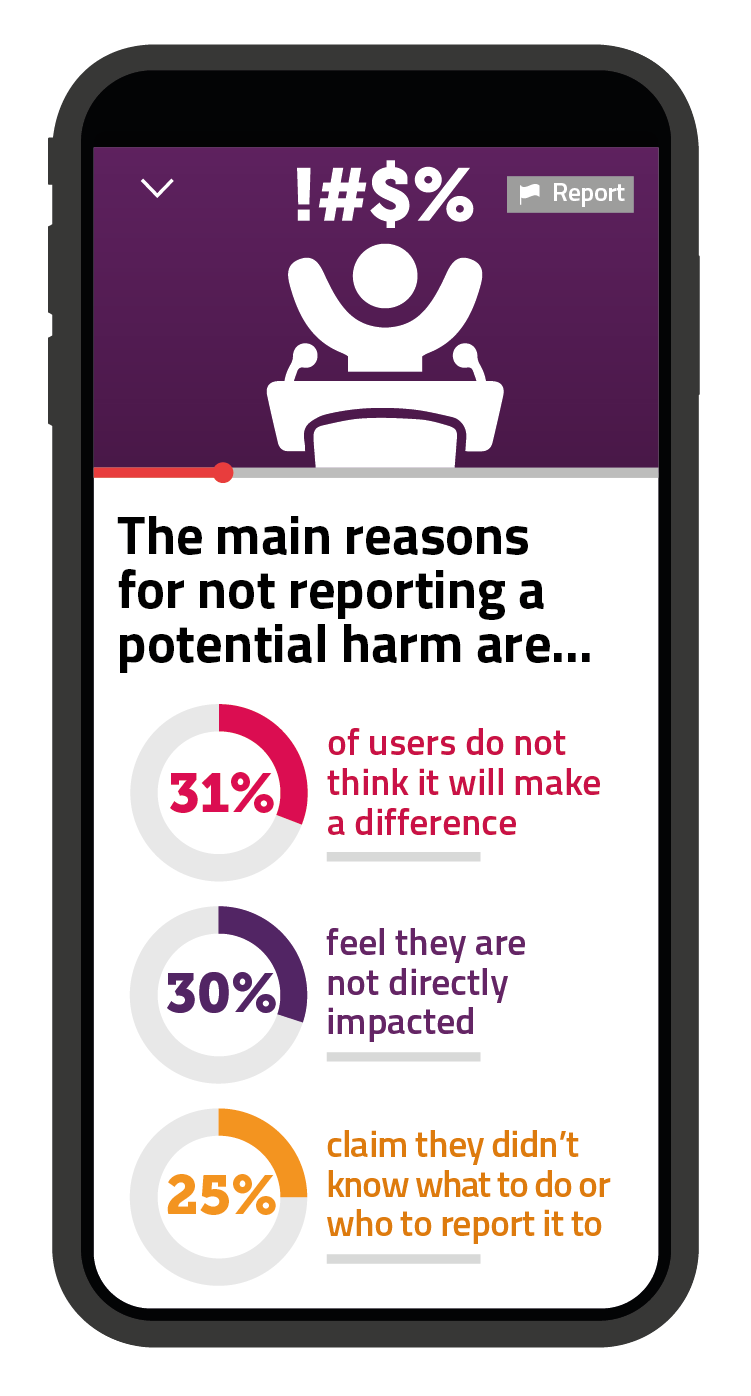

- Low awareness of safety measures. Six in 10 VSP users are unaware of platforms’ safety and protection measures, while only a quarter have ever flagged or reported harmful content.

We have also published two research reports from leading academics: one from The Alan Turing Institute, covering online hate, and one from the Institute of Connected Communities at the University of East London, on the protection of minors online.

Guidance for protecting users

As Ofcom begins its new role regulating video-sharing platforms, we recognise that the online world is different to other regulated sectors. Reflecting the nature of video-sharing platforms, the new laws in this area focus on measures providers must consider taking to protect their users – and they afford companies flexibility in how they do that.

The massive volume of online content means it is impossible to prevent every instance of harm. Instead, we expect VSPs to take active measures against harmful material on their platforms. Ofcom’s new guidance is designed to assist them in making judgements about how best to protect their users. In line with the legislation, our guidance proposes that all video-sharing platforms should provide:

- Clear rules around uploading content. VSPs should have clear, visible terms and conditions which prohibit users from uploading the types of harmful content set out in law. These should be enforced effectively.

- Easy flagging and complaints for users. Companies should implement tools that allow users to quickly and effectively report or flag harmful videos, signpost how quickly they will respond, and be open about any action taken. Providers should offer a route for users to formally raise issues or concerns with the platform, and to challenge decisions through dispute resolution. This is vital to protect the rights and interests of users who upload and share content.

- Restricting access to adult sites. VSPs with a high prevalence of pornographic material should put in place effective age-verification systems to restrict under-18s’ access to these sites and apps.

Enforcing the rules

Ofcom’s approach to enforcing the new rules will build on our track record of protecting audiences from harm, while upholding freedom of expression. We will consider the unique characteristics of user-generated video content, alongside the rights and interests of users and service providers, and the general public interest.

If we find a VSP provider has breached its obligations to take appropriate measures to protect users, we have the power to investigate and take action against a platform. This could include fines, requiring the provider to take specific action, or – in the most serious cases – suspending or restricting the service. Consistent with our general approach to enforcement, we may, where appropriate, seek to resolve or investigate issues informally first, before taking any formal enforcement action.

Kevin Bakhurst, Ofcom’s Group Director for Broadcasting and Online Content, said: “Sharing videos has never been more popular, something we’ve seen among family and friends during the pandemic. But this type of online content is not without risk, and many people report coming across hateful and potentially harmful material.

“Although video services are making progress in protecting users, there’s much further to go. We’re setting out how companies should work with us to get their houses in order – giving children and other users the protection they need, while maintaining freedom of expression.”

Next steps

We are inviting all interested parties to comment on our proposed draft guidance, particularly services which may fall within scope of the regulation, the wider industry and third-sector bodies. The deadline for responses is 2 June 2021. Subject to feedback, we plan to issue our final guidance later this year. We will also report annually on the steps taken by VSPs to comply with their duties to protect users.

NOTES TO EDITORS

- Ofcom has been given new powers to regulate UK-established VSPs. VSP regulation sets out to protect users of VSP services from specific types of harmful material in videos. Harmful material falls into two broad categories under the VSP Framework, which are defined as:

  - Restricted Material, which refers to videos which have been given, or would be likely to be given, an R18 certificate, or which have been, or would be likely to be, refused a certificate. It also includes other material that might impair the physical, mental or moral development of under-18s.

  - Relevant Harmful Material, which refers to any material likely to incite violence or hatred against a group of persons or a member of a group of persons based on particular grounds. It also refers to material the inclusion of which would be a criminal offence under laws relating to terrorism; child sexual abuse material; and racism and xenophobia.

- The VSP consumer research fieldwork was conducted between September and October 2020. The ‘last three months’ refers to the three months prior to interview. The research explored a range of websites and apps used by people in the UK to watch and share videos online. The research does not seek to identify which services will fall into Ofcom’s regulatory remit, nor to pre-determine whether any particular service would be classed as a VSP under the regulatory definition. Evidence in this research is self-reported by respondents. As such, the evidence is limited by respondents’ freedom to decide whether to participate, their ability to recall events, the accuracy of that recall and which experiences they chose to disclose.

- Figures on hateful content are based on the combined data for exposure to videos or content encouraging hate towards others, videos or content encouraging violence towards others, and videos or content encouraging racism.

- This research question looked at VSP users’ exposure to 26 potential online harms, which capture a broad spectrum of behaviours and content that users may find potentially harmful. These potential harms went beyond the specified categories of harmful material in the VSP legislation. A full breakdown of this research can be found in the User Experience of Potential Online Harms within Video Sharing Platforms Report (PDF, 4.6 MB). The harms are referred to as ‘potential online harm(s)’ because the research did not seek to provide a view on which, if any, of these experiences are considered harmful, nor on what harm, if any, actually arose from these experiences. VSP providers are not under a regulatory obligation under the VSP Framework to have measures in place relating to any content or conduct outside of the specified areas of harmful material in the legislation.